|

I am a cofounder and research scientist at Black Forest Labs. I obtained my PhD and Master's degree from the University of Waterloo, and a Bachelor of Science from the Technical University of Munich. My main research interest is generative modeling, with a focus on diffusion models. Email / Google Scholar / Twitter / GitHub |

|

|

|

|

Axel Sauer, Frederic Boesel, Tim Dockhorn, Andreas Blattmann, Patrick Esser, Robin Rombach SIGGRAPH Asia, 2024 arXiv We present a novel distillation method called Latent Adversarial Diffusion Distillation (LADD) and distill Stable Diffusion 3 down to one to four inference steps. |

|

Patrick Esser, Sumith Kulal, Andreas Blattmann, Rahim Entezari, Jonas Müller, Harry Saini, Yam Levi, Dominik Lorenz, Axel Sauer, Frederic Boesel, Dustin Podell, Tim Dockhorn, Zion English, Kyle Lacey, Alex Goodwin, Yannik Marek, Robin Rombach ICML, 2024 (Best Paper Award) arXiv We present Stable Diffusion 3, an improved version of Stable Diffusion using a novel multimodal Diffusion Transformer architecture. |

|

Andreas Blattmann*, Tim Dockhorn*, Sumith Kulal*, Daniel Mendelevitch, Maciej Kilian, Dominik Lorenz, Yam Levi, Zion English, Vikram Voleti, Adam Letts, Varun Jampani, Robin Rombach arXiv, 2023 code / weights (svd) / weights (svd-xt) We present Stable Video Diffusion, a latent video diffusion model for high-resolution, state-of-the-art text-to-video and image-to-video generation. We demonstrate the necessity of a well-curated pretraining dataset for generating high-quality videos and present a systematic curation process to train a strong base model, including captioning and filtering strategies. |

|

|

Dustin Podell, Zion English, Kyle Lacey, Andreas Blattmann, Tim Dockhorn, Jonas Müller, Joe Penna, Robin Rombach ICLR, 2024 (Spotlight Presentation) arXiv / code / weights / refiner weights We present SDXL, an improved version of Stable Diffusion using several conditioning tricks and multi-resolution training. We also release a refiner model to further improve visual fidelity. |

|

Tim Dockhorn, Tianshi Cao, Arash Vahdat, Karsten Kreis TMLR (2835-8856), 2023 arXiv / project page / code / twitter We train diffusion models with strict differential privacy guarantees and outperform previous methods by large margins. |

|

Andreas Blattmann*, Robin Rombach*, Huan Ling*, Tim Dockhorn*, Seung Wook Kim, Sanja Fidler, Karsten Kreis CVPR, 2023 arXiv / project page / twitter We take Stable Diffusion, insert additional temporal layers and fine-tune them on video data while keeping the spatial layers fixed. |

|

|

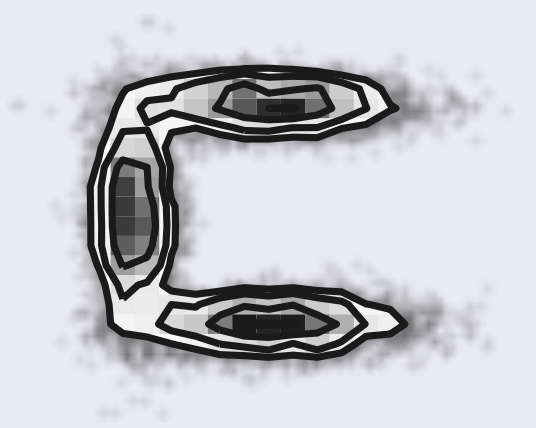

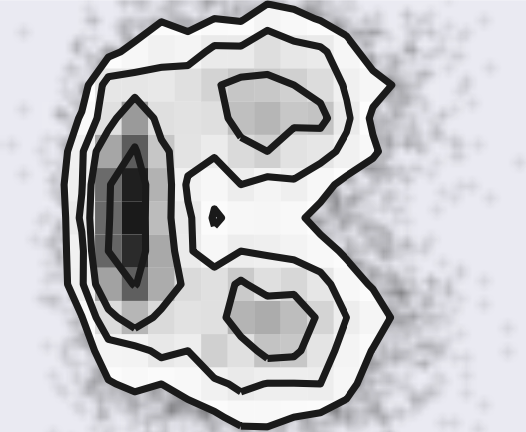

Karsten Kreis*, Tim Dockhorn*, Zihao Li, Ellen Zhong Machine Learning for Structural Biology Workshop, NeurIPS, 2022 (Oral Presentation) arXiv / twitter We train diffusion models over molecular conformations from cryo-EM imaging data. |

|

|

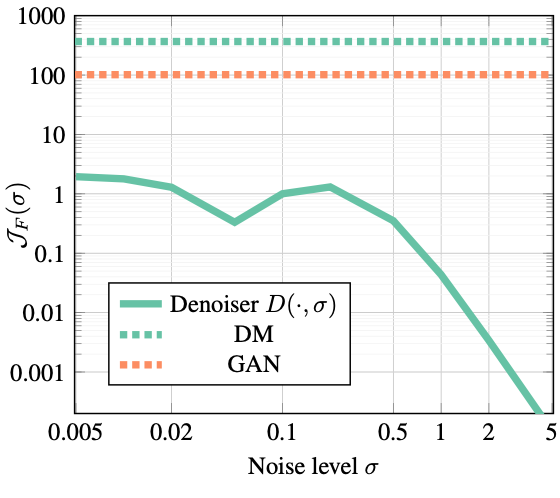

Tim Dockhorn, Arash Vahdat, Karsten Kreis NeurIPS, 2022 arXiv / project page / video / code / twitter GENIE distills higher-order score terms into a small neural network and uses them for accelerated diffusion model sampling. |

|

|

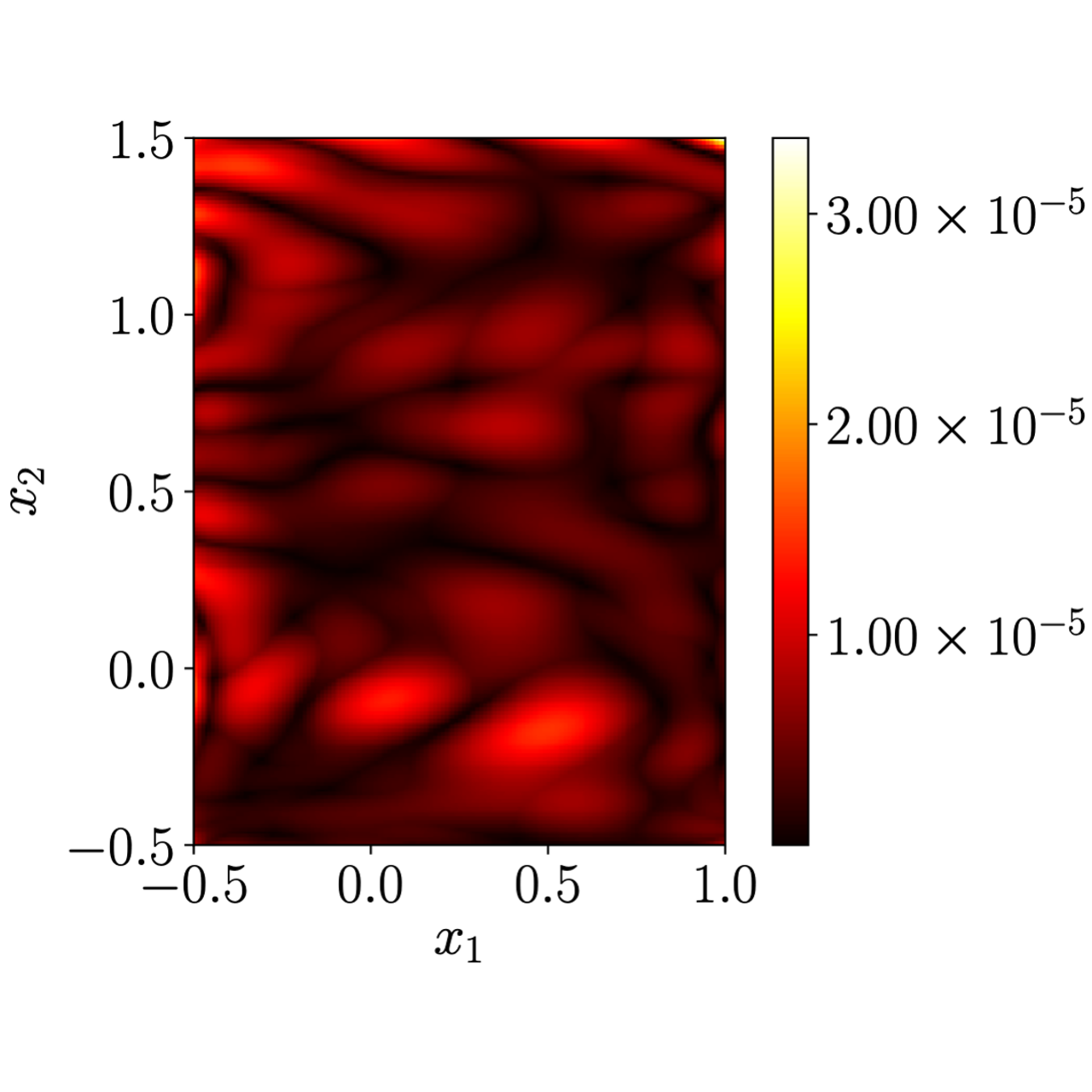

Tim Dockhorn, Arash Vahdat, Karsten Kreis ICLR, 2022 (Spotlight Presentation) arXiv / project page / video / code / twitter We propose a novel diffusion process that uses auxiliary velocity variables for more efficient denoising and higher-quality generative models. |

|

|

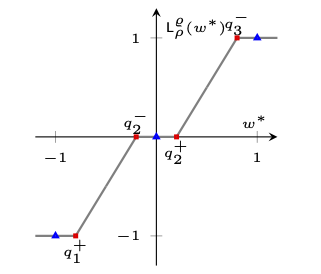

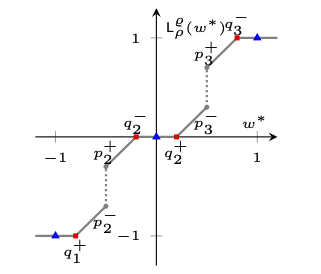

Tim Dockhorn, Yaoliang Yu, Eyyüb Sari, Mahdi Zolnouri, Vahid Partovi Nia NeurIPS, 2021 arXiv / video / twitter We show that proximal maps can serve as a natural family of quantizers that is both easy to design and analyze, and we propose ProxConnect as a generalization of the widely-used BinaryConnect. |

|

Tim Dockhorn*, James Ritchie*, Yaoliang Yu, Iain Murray INNF+: Invertible Neural Networks, Normalizing Flows, and Explicit Likelihood Models, ICML, 2020 arXiv / video / code / twitter We denoise distributions using Normalizing Flows in conjunction with amortized variational inference. |

|

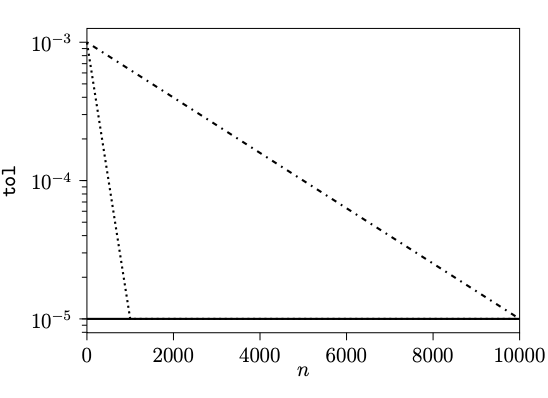

Tim Dockhorn Master's Thesis, 2017 thesis I give a comprehensive and self-contained introduction to continuous Normalizing Flows, and show that their training can be accelerated using tolerance schedulers. |

|

Tim Dockhorn arXiv, 2019 arXiv / code / twitter I show that small neural networks (fewer than 500 parameters) can learn solutions of complex PDEs when optimized with BFGS. |

|

Tim Dockhorn Bachelor's Thesis, 2017 thesis I test a variety of turbulence models within a high-order discontinuous Galerkin method. |

|

|

|

IWR Colloquium, 11.01.2023 slides Hosted by Fred Hamprecht. |

|

Google Tech Talks: Differential Privacy for ML, 12.04.2023 slides Hosted by Thomas Steinke. |

|

Source code credit to Jon Barron |